Solar’s development – stalled by Reagan in the US and underinvestment in Europe – offers lessons for today’s policymakers in other technologies

Photo: Dennis Schroeder, Wikimedia Commons.

If there’s a good news climate change story right now, one to counteract the grim daily scroll of reports about wildfires, droughts, dying coral reefs, and deadly heatwaves, it’s the stunning rise of solar power.

With cheap panels now springing up on Texas ranches, Chinese lakes, and German balconies, graphs showing solar’s takeoff resemble the “hockey stick” charts used by Al Gore to warn about global warming in the first place. Under the right conditions, solar is now “the cheapest source of electricity in history”, the International Energy Agency (IEA) has declared.

Trend lines point to an astonishing shift in the world’s energy mix. A recent report by the Economist – a solar sceptic only a decade ago – predicts that solar will be the biggest global source of electricity by the mid-2030s, and possibly the biggest source of energy overall a decade later.

On one level, this is a success story – a technology riding to the rescue as the world smoulders. But delve deeper into the history of solar, and a much more unsettling picture emerges.

Even in the 1970s, some policymakers knew solar was on track to become competitive with fossil fuels, given enough investment. But in the US, vital funding programmes were cut, and in Europe, ambitious recommendations weren’t taken up, leaving solar to flounder for a decade or more in the 1980s and 1990s, say experts who have studied its development. Since 2000, first the German and then the Chinese solar industry have stepped into the gap. But overall, global procrastination has hurt everybody.

“We could have done this sooner,” said Sugandha Srivastav, an energy innovation economist at the University of Oxford, who has asked these “what if” questions about solar power.

This is not just an exercise in hindsight and regret. The missed opportunities in solar provide lessons for policymakers today: on the need to rapidly scale up particularly promising new technologies to drive down costs, and to gather much better data on how emerging technologies are performing. This cautionary tale has attracted intensive academic study as the climate crisis has worsened and the need for renewables has grown. Here, drawing on that research, we offer a close look at this sorry history.

“For me, one of the big lessons from solar is it took way too long to use as a model for other technologies,” says Greg Nemet, a professor at the University of Wisconsin–Madison who has written a widely cited book on this subject, How Solar Energy Became Cheap. “The question of how to speed it up is certainly hugely important.”

The origins of solar

The history of solar power stretches back into the 19th century, with the discovery of the photovoltaic effect – the generation of electricity from exposure to light – by the French physicist Edmond Becquerel in 1839. By the end of the century, American inventors were already patenting early designs for panels. In the 1930s, Siemens invented a copper-based cell; the company had grand designs – shelved by World War II – to carpet the Sahara Desert with solar panels and send electricity back to Europe. But the birth of modern solar panels came in the 1950s at the US’s legendary Bell Labs. Scientists at Bell found that silicon produced a much more efficient cell, and amid excitable headlines about a new era of energy, solar gradually crept into practical applications, powering the new generation of satellites, for example.

Prices continued to fall as these niche uses expanded, and in the 1970s, world events conspired to make the case for solar urgent. The 1973 oil shock sent the Western world on a desperate hunt for new sources of energy. Even after the immediate shortages subsided, many believed that the world was simply running out of oil.

Meanwhile, stirrings of worry about the impact of pumping so much carbon dioxide into the atmosphere began to reach official circles. In 1977, the chief scientific adviser to the new US president, Jimmy Carter, warned him that the impact could be “catastrophic” warming and crop failure, and urged a rapid shift away from fossil fuels.

Carter publicly embraced solar, installing thermal panels on the roof of the White House. More substantially, his administration continued and expanded the so-called “Block Buy” programme, which bought photovoltaic panels from manufacturers at increasingly tough price and efficiency points.

This programme can be seen as an early example of what’s now called an advance market commitment (AMC): the government makes a new technology viable by promising to buy it at a certain price point – from whichever company can deliver – driving down costs. This approach doesn’t just apply to green energy. In recent years, AMCs have been touted as a way to create everything from technologies that remove carbon dioxide from the air, to low-carbon concrete, to broad-spectrum antimicrobial drugs that could prove vital in a pandemic.

Down the learning curve

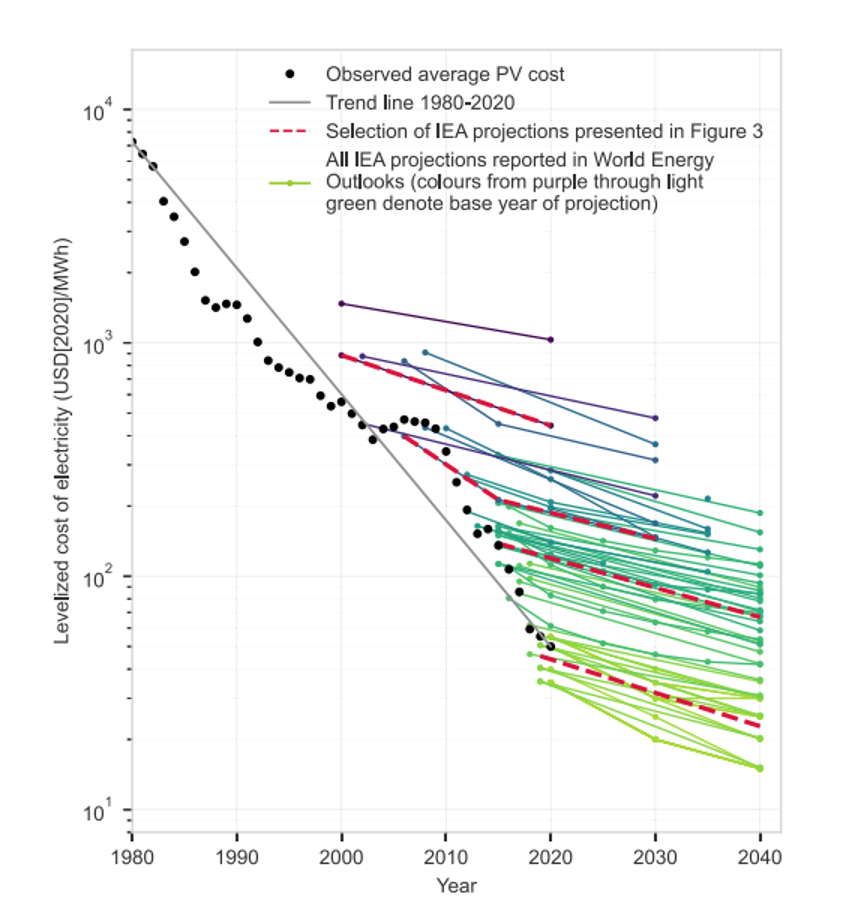

According to Nemet’s research, this and related programmes were working: solar module costs dropped fivefold from 1974 to 1981, a pace of improvement only matched again after 2010. The more panels were manufactured, the more costs came down as, for example, processes were fine-tuned, bottlenecks overcome, new machinery invented and supply chains developed. This process is often called an “experience curve” or a “learning curve”.
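The learning curve described above is often formalised as Wright’s law. The article itself gives no equation; what follows is the standard textbook form, offered as a sketch. Here $C(x)$ is unit cost once cumulative production reaches $x$, $C_0$ is the cost at a reference volume $x_0$, and $b > 0$ is the learning exponent (all symbols introduced here for illustration):

$$
C(x) = C_0 \left(\frac{x}{x_0}\right)^{-b}, \qquad \mathrm{LR} = 1 - 2^{-b}
$$

The learning rate $\mathrm{LR}$ is the fractional cost reduction for each doubling of cumulative production: a learning rate of 20%, for example, means every doubling of output cuts unit costs by a fifth.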

Paul Maycock, a US official who was one of the driving forces behind the Block Buy programme, had seen this learning curve concept play out when he worked in the semiconductor industry, and in 1975 plotted a remarkably prescient graph that showed how costs had fallen as more solar was manufactured, and would continue to fall to become competitive with fossil fuels if the industry was scaled up. And it wasn’t only Maycock making these predictions; other academics and entrepreneurs in the 1970s were observing or assuming that solar was following a learning curve towards affordability.

“We could have realised that easily in 1970,” said Doyne Farmer, an economist at the University of Oxford who was one of the first to correctly predict solar would become cheaper than coal. “We could have allocated our resources differently [to accelerate solar down the learning curve],” he said, although it “would have required really planning ahead.”

Reagan pulls the plug

Unfortunately for the budding American solar industry, President Ronald Reagan had other ideas. The US government solar programme – which had sustained the industry – was cut by 90% in the three years after Reagan took office in 1981. Planned larger rounds of the Block Buy programme were drastically slashed, and it ended in 1985.

When the cuts hit, Farmer was working at the US’s Los Alamos National Laboratory, which at the time had one of the world’s leading solar research groups. “Ronald Reagan dismantled it as a line item in his budget, because he just didn't like it,” he recalls.

The Reagan administration’s opposition to Carter’s solar programmes, which its supporters dubbed “solar socialism”, was ideological: the government should not be involved in the ‘D’ part of R&D, as this was the private sector’s job. But abandoning government demand creation for solar ignored the recent lessons of the semiconductor industry, which, as Nemet points out, had subsisted in the 1960s largely on US government defence contracts rather than broader commercial sales. “That got that technology to scale and improve,” he said.

And it’s not as if panels in the 1980s needed fundamental new breakthroughs to reach today’s efficiencies. As Nemet tells it, the modern solar panel had basically been invented by around 1985; what was needed was mostly scale-up. “There’s been incremental improvements on that since,” he said. As with wind turbines, a “dominant design” was already established.

“Probably, all these [solar] advances could have been done well, well earlier,” said Rutger Schlatmann, head of the solar division at the Helmholtz-Zentrum Berlin, which studies climate-friendly technologies. But when the initial shock of the 1973 oil crisis faded, the market for solar dwindled to a “minute” size, he said during a debate last year.

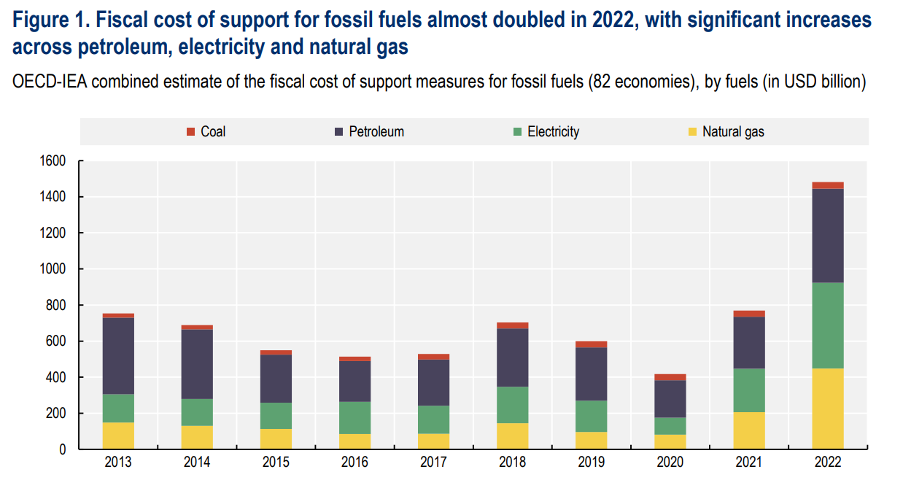

The 1980s also saw fossil fuel companies begin to pour vast sums into marketing, including campaigns to sow doubt about the existence of climate change, points out Srivastav, even though some of these companies knew internally that it was dangerous. (Huge subsidies for fossil fuels have also endured: in 2022, governments still spent an estimated $1.4 trillion propping them up, according to the Organisation for Economic Co-operation and Development.)

Meanwhile in Europe

Around the time Maycock observed that solar in the US was following a learning curve towards competitiveness, European officials were noticing something similar.

In 1977, Wolfgang Palz, who would lead the European Commission’s solar R&D efforts for the next two decades, also enthused about dramatic drops in prices, and the potential for more, as manufacturing scaled up. Even then, there were no fundamental technological obstacles, he recalls. “It could be done. But you need money to invest.” In a book published that year, he predicted that with enough encouragement, “cost-effective central power plants can be expected in the next 10 to 15 years”.

However, in the mid-1970s, despite a few demonstration projects, research on photovoltaics in Europe was minimal compared to the US. Although the European Community started its first comprehensive energy R&D programme in 1975, including solar, “the very optimistic attitude of the USA is not necessarily shared in all European countries,” an assessment of the programme later concluded.

‘No major technical obstacles’

Palz, however, believed in solar, and pushed for a much bigger investment to drive it down the learning curve. Carter’s Block Buy scheme and the broader US solar R&D push had drastically cut module costs, he pointed out at the time.

In a report published in 1983, he argued that government intervention was needed to break a “vicious circle”, whereby private players refused to invest because solar still wasn’t widely commercially viable. State action would drive down the price until it was. Enormous amounts of money were needed, Palz concluded: 400–750 million European Currency Units (ECUs) – the forerunner of the euro, later converted one-to-one – to finance massive new panel factories, and a fund of at least 500 million ECUs to subsidise the purchase of photovoltaics.

But while photovoltaics became a staple of European Commission research programmes, the sums invested remained in the low tens of millions of euros a year. Brussels did not have the power to launch a vast demand-creation programme for solar; that power was held by the member states. However, those states did not start to substantially subsidise demand until the turn of the millennium, when they launched a wave of feed-in tariffs. There was a “missing market for photovoltaic systems” during the 1990s, one Commission assessment found.

It was not the urgent, big-bazooka approach Palz had demanded. He recalls that in the 1970s and 1980s, nuclear – both fission and fusion – was widely seen as the energy of the future. “Nuclear was always our enemy,” he said. This was also true in the Commission’s research directorate, where Palz’s solar group was “tolerated” but never fully embraced, he recalls.

Japan takes the lead

The 1980s and 1990s were not a period of no progress for solar, far from it. There were city-scale installation programmes in Germany, for example, and Japan started a rooftop subsidy scheme in the mid-1990s. By 2000 the country was installing more than 15,000 systems a year, wresting the global lead from the US.

But the world could have moved much faster. In the two decades from 1981, installed solar capacity globally increased more than 100-fold, driving down module prices by 79%. Yet in the next two decades capacity multiplied more than 600-fold, and prices dropped by 96%. Panel prices are now so low that the bulk of solar costs stem from so-called “soft costs”, like permitting and labour.

As solar was rolled out, prices dropped. But progress in the 1980s and 1990s was relatively slow.
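The capacity and price figures above imply a rough learning rate for each period. This is a minimal back-of-envelope sketch, assuming Wright’s law (cost falls by a fixed fraction per doubling of cumulative output), treating installed capacity as a stand-in for cumulative production and module price as a stand-in for cost, and taking the 100-fold and 600-fold multiples as exact. Because the article says “more than” in both cases, the implied rates are upper bounds. The function names are hypothetical, chosen for illustration.

```python
import math


def learning_exponent(capacity_multiple: float, price_ratio: float) -> float:
    """Wright's-law exponent b such that price_ratio == capacity_multiple ** (-b).

    Assumption: price is a proxy for cost, installed capacity a proxy for
    cumulative production.
    """
    return math.log(1.0 / price_ratio) / math.log(capacity_multiple)


def learning_rate(b: float) -> float:
    """Fractional price reduction per doubling of cumulative capacity."""
    return 1.0 - 2.0 ** (-b)


# Figures from the text, treated as exact: (capacity multiple, final/initial price).
periods = {
    "1981-2001": (100, 1 - 0.79),  # >100-fold capacity, prices down 79%
    "2001-2021": (600, 1 - 0.96),  # >600-fold capacity, prices down 96%
}

for label, (multiple, ratio) in periods.items():
    b = learning_exponent(multiple, ratio)
    print(f"{label}: b = {b:.2f}, learning rate = {learning_rate(b):.0%}")
```

Under these assumptions the sketch prints a learning rate of about 21% for the first two decades and about 29% for the next two. These are crude estimates: a proper analysis would use cumulative production data and account for the other cost drivers discussed later in this piece.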

Germany, then China

In the 2000s, Japan passed the baton to Germany, which launched an unprecedented feed-in tariff for renewables that led to a boom in installations, growing the world market 30-fold, and turning the country into a manufacturing leader. Spain launched its own feed-in tariff, and in the late 2000s was second only to Germany in installations. In 2001, the EU released the first of several directives encouraging renewables.

However, in the early 2010s, a coalition government of conservatives and free-market liberals wound down Germany’s subsidies, helping to bankrupt some leading German solar firms, like the once-dominant Q-Cells.

Germany had dropped the solar baton. It was picked up by Chinese entrepreneurs, who were faster than the Germans at hiring and scaling up production. They also benefited from China’s own feed-in tariff introduced in 2011, from the country’s experience at mass production and from an influx of talented Chinese-Australian solar scientists. China wrested almost total control of solar panel manufacturing, which it holds to this day – to the chagrin of EU leaders worried about energy security.

Lessons for today

What does this history lesson teach current day policymakers?

Deciding which technologies to back through top-down research funding, procurement and prizes is the perennial headache of grant officers and politicians from Brussels to Beijing.

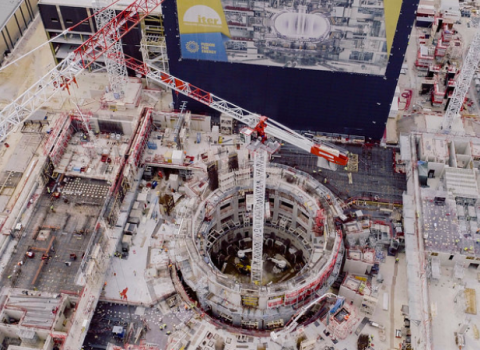

“Ex-ante you really don't know,” acknowledges Nemet. The EU has bet big on hydrogen, for example, but there are questions over whether it has been overhyped. And witness the endless delays and budget wranglings over the International Thermonuclear Experimental Reactor, a mega-project in the south of France which has now consumed tens of billions of euros – but has still provided no certainty over whether and when nuclear fusion will deliver commercial electricity.

Despite the unknowability of the future, says Nemet, the story of solar makes it clear that a certain class of technologies – wind turbines, batteries for electric vehicles, and increasingly heat pumps, for example – follow learning curves like solar, meaning that the more you build, the cheaper they get. They are typically modular, consisting of many small units, produced in a controlled environment, and with a settled, dominant design so manufacturers aren’t constantly having to tinker.

“The moment you know a technology has this really steep learning effect, going all in and capitalising on that effect is what you should be doing,” said Srivastav.

Are we on the curve?

Determining whether a technology is indeed following a learning curve isn’t simple, of course. Looking at a graph, it might look like increasing production of a technology is driving down costs. But there might be some other factor, like company R&D or an advance in basic science, that is actually propelling new efficiencies instead, or in addition.

In other words, scale-up alone might not be enough: without a fundamental breakthrough, or some other driver, the technology might hit a wall.

What’s more, with solar it was straightforward to monitor progress, because it’s reasonably easy to track the cost per watt, for example. But for pre-commercial technologies like nuclear fusion, which isn’t selling electricity to anyone yet, this gets trickier. Instead, with fusion, you’d need to measure another metric, like the ratio between the energy put into fusion experiments and the energy they produce, Farmer suggests. “You look at those metrics, and you look at how they've behaved through time. And it's generally not bad to just assume that they keep doing through time what they have [been] doing,” he said.

He thinks it will take on the order of 50 years to achieve commercial fusion power, “and by then we're going to have other ways of dealing with climate change.”
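Farmer’s rule of thumb, that a performance metric will keep improving at its historical rate, can be sketched as a log-linear trend fit and extrapolation. This is a minimal illustration, not necessarily the method Farmer uses: it assumes improvement is exponential in time, and the data series is synthetic (a metric doubling every five years from 2000), not real fusion or solar measurements. The function names are hypothetical.

```python
import math


def fit_exponential_trend(years, values):
    """Ordinary least-squares fit of log(value) = intercept + growth * year.

    Returns (growth, intercept); growth is the annual log growth rate.
    Assumes all values are positive and improvement is exponential in time.
    """
    logs = [math.log(v) for v in values]
    n = len(years)
    mean_t = sum(years) / n
    mean_y = sum(logs) / n
    cov = sum((t - mean_t) * (y - mean_y) for t, y in zip(years, logs))
    var = sum((t - mean_t) ** 2 for t in years)
    growth = cov / var
    return growth, mean_y - growth * mean_t


def year_reaching(target, growth, intercept):
    """Year at which the fitted trend crosses `target` (assumes growth > 0)."""
    return (math.log(target) - intercept) / growth


# Hypothetical, synthetic series: a metric that doubles every 5 years from 1.0
# in 2000. These are NOT real measurements of any technology.
years = [2000, 2005, 2010, 2015, 2020]
values = [2 ** ((y - 2000) / 5) for y in years]

growth, intercept = fit_exponential_trend(years, values)
print(f"doubling time: {math.log(2) / growth:.1f} years")
print(f"trend reaches 100x in: {year_reaching(100, growth, intercept):.0f}")
```

On this synthetic series the fit recovers the five-year doubling time and projects that the metric reaches 100 times its 2000 level around 2033. A real forecast would also need error bars: a straight line through a handful of points says nothing about how far the trend can be trusted, which is one reason better tracking data matters.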

Where’s the data?

Still, although tracking is an imperfect science, good data on technology progress is seen as crucial to finding the best candidates for acceleration. It’s therefore a near-scandal that we have such sporadic data, argues Farmer, who has tried and failed to win grant money to do this kind of tracking. “We would save massive quantities of money by understanding this and investing more wisely,” he said. “You are talking hundreds of billions, if not trillions, that we could save.”

When Farmer made his prediction in 2010 that solar would become cheaper than coal in a decade’s time, people thought it was a “wacky prediction, because it was out of line with what everyone else was saying.” The problem is that “people just were not aware that […] the cost of some technologies drops dramatically in time. The cost of other technologies don’t. It’s a very technology-specific thing,” he argued.

“Humans are really bad at understanding exponential patterns,” said Srivastav. “Why were the solar forecasts wrong? Over and over?”

Hiding information

Another problem is that private companies developing early stage technologies often conceal their true costs from the public, as this is considered proprietary information, making tracking price reductions tricky, points out Nemet. “Companies should be super open about their data, because, in a sense, taxpayers or ratepayers own that information. They paid for it,” he says, referring to companies that receive state support.

There have been some attempts to systematically collect technology tracking data. The Santa Fe Institute, a US private research organisation, created a performance curve database in 2009, tracking the progress of myriad technologies, but it hasn’t been updated since. Nemet and his research team have, however, managed to win European Research Council funding for a new project tracking the adoption of new technologies. Cost data should be added next year. With better data, we’d have more of a shot at working out which of today’s technologies are the solars of tomorrow – on the path to affordability – and which resemble nuclear fission, doomed to stay on the expensive side.

“We're making decisions with not great information,” warned Nemet. “It's a really crucial issue”.

What politicians do with this data is another question – like Reagan, they might choose to ignore it. But at least we’d have a better chance of not repeating the delays to solar that have cost the earth dear.

A unique international forum for public research organisations and companies to connect their external engagement with strategic interests around their R&D system.
