MEPs will soon have to take a position on the Commission’s proposal for new artificial intelligence rules. If too onerous, they will hamper future uses and restrict Europe’s competitiveness, experts say

EU funding for artificial intelligence (AI) should focus on creating one big research infrastructure, and upcoming rules for the sector should be kept to a minimum, MEPs were told at a hearing on the rapidly evolving technology on Monday.

The European Commission will unveil first-of-its-kind AI regulations next month, requiring "high-risk" AI systems to meet minimum standards of trustworthiness. Officials say the rules will tread the fine line of respecting “European values,” including privacy and human rights, without hampering innovation.

Along with new rules, the EU will back “targeted investment into lighthouses of AI research”, according to Khalil Rouhana, deputy director general of DG Connect.

Rouhana expects up to €135 billion from the EU’s COVID-19 recovery plan will be invested in digital infrastructure and schemes across Europe in coming years, with a large amount of this money – he hopes – devoted to AI projects.

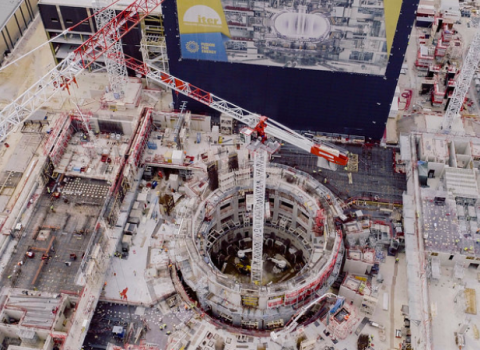

But he was told by tech-minded MEPs and experts at the European Parliament session that the EU should invest more strategically in AI, creating a central laboratory modelled on CERN in Geneva, Switzerland, which has put Europe at the forefront of particle physics research.

“I believe we should commit to a CERN for AI, and locate it in the most competitive AI region of Europe,” said Sweden’s Jörgen Warborn, centre-right MEP with the European People's Party.

More could be achieved by concentrating money, rather than doling it out to separate initiatives, Warborn said, noting Europe has only a handful of the world’s top AI companies.

“We have a big problem when it comes to competitiveness,” he said. “We’re lagging behind. The US invests €25 billion per year in AI, China €10 billion, and Europe roughly €2 billion.”

Marina Geymonat, the head of artificial intelligence at Telecom Italia Group in Rome, agreed, saying the EU should “put all our money on one horse, and try and make [something] that enables all our companies to be joined together in a supranational body.”

Similarly, Volker Markl, chair of database systems and information management at TU Berlin and director of the Berlin Big Data Centre, disagrees with the traditional EU strategy of creating de-centralised research hubs. “We’re creating things with lots of stakeholders, like [the cloud computing initiative] GAIA-X,” said Markl. “But de-centralisation doesn’t allow us to reach economies of scale, which means digital sovereignty becomes hard to reach.”

European approach

Rouhana talked up “the European approach” to ethical AI as a third way, offering an alternative to both the US laissez-faire and the Chinese authoritarian approaches to the technology. The EU’s AI policy will be a template for other countries to follow, he said. “Europe is known for having trustful services. That’s our brand and we need to develop it.”

The AI rules are part of a Brussels push to wield greater influence over the world’s emerging technologies and stop European companies “falling behind the digital superpowers,” said Dragoş Tudorache, a liberal member of the European Parliament and chair of its committee on AI in the digital age.

But there is concern that legislation will have the opposite effect, stopping creativity in its tracks. Brussels has increasingly set the standard on global technology policy, but its privacy rules for consumer data, the General Data Protection Regulation (GDPR), run counter to the “creative experimentation” AI needs, in the view of Sebastian Wieczorek, vice president of artificial intelligence technology at SAP, Europe’s biggest software company and the largest listed company in Germany.

“There is no data that doesn’t potentially contain personal information. With GDPR, you have to define a purpose for using data, and delete it when the purpose is completed or not there. This is contrary to innovation,” Wieczorek said.

Markl agreed, warning that EU data protection rules involve a “slow and complex” process for researchers and companies. He highlighted recent experience with COVID-19 track and trace apps. “Privacy prevented the app from being [truly] effective in Germany,” he said.

GDPR rules “don’t always make sense; in some places, they can be a hurdle,” agreed German MEP Axel Voss of the European People’s Party.

For Wieczorek, Europe should support the creation of privacy-sensitive AI, like controversial facial recognition technology. There are concerns about the technology, which uses surveillance cameras, computer vision and predictive imaging to monitor people in public places, and there have been calls for its use to be banned in the EU.

Company concerns

MEPs heard from participants who, like Wieczorek, are concerned that Brussels will over-legislate.

“Companies fear the regulation,” said Kay Firth-Butterfield, a lawyer who is head of artificial intelligence and machine learning at the World Economic Forum.

She pointed out the US, as world AI leader, is choosing instead to draw up voluntary guidelines shaped in part by industry. Representative Robin Kelly, who has championed a US national strategy on AI, asked her European counterparts this month to adopt rules that are “narrow and flexible”.

Liberal MEP Nicola Beer said rules had to be flexible. “Companies needed space to try new things,” she said.

“Most of our companies are SMEs and you have to let them grow,” agreed Kristi Talving, deputy secretary general for business environment at the Estonian Ministry of Economic Affairs and Communications.

Rouhana acknowledged the risk of smothering the AI industry, but said upcoming rules for the technology will be “proportionate” and “reassure companies and citizens” without “over-burden[ing] businesses.”

In Voss’s view, AI systems need easier access to personalised data, in order to cancel out algorithmic biases.

Supporters of regulation say proper human oversight is needed for a technology that presents new risks to individual privacy and livelihoods. “If bias goes in, bias comes out,” said Moojan Asghari, co-founder of Women in AI, a networking group.

Accidentally destroying the world

The EU’s AI proposal is expected to home in on riskier uses of the technology, such as in healthcare and self-driving cars, and how those will come under tougher scrutiny. In 2020, the European Commission presented its AI white paper, which likened the current situation to "the Wild West".

According to Jaan Tallinn, founding engineer of video call app Skype, who is an investor in some 150 start-ups and a past board member of UK AI pioneer DeepMind, the EU should “differentiate AI dealing with people and AI dealing with objects.”

Tallinn took part in an expert group that helped shape the EU’s AI policy. Among the group members there was “a stark distinction between people who are concerned about AI now and people who are thinking about what will come. These are two groups that should be cooperating. One of the big challenges is to bring them together,” he said.

As co-founder of the Cambridge Centre for the Study of Existential Risk and the Future of Life Institute, Tallinn claims to have heard all the dystopian possibilities for AI. “If someone accidentally destroys the world in the next few years, I will probably personally know them,” he said.
