Utrecht University has opted out of the Times Higher Education league table this year, adding further momentum to the research assessment reform movement that is pushing for universities and researchers to be judged on quality, not quantity.

The Times Higher Education (THE) World University Rankings, published in September, had a notable absence: Utrecht University, a regular in the top 100, had withdrawn, citing concerns about the rankings’ emphasis on scoring and competition.

The move comes as questions mount over the data used to draw up university league tables. In Europe in particular, momentum has gathered behind assessing researchers more on quality than quantity.

For Utrecht University, withdrawing from the THE rankings is less a battle cry for reform and more a natural move in line with the university’s values. That does not mean it would be unhappy if other universities followed suit, though.

“Of course, we would like to see other institutions follow this,” said Jeroen Bosman, Utrecht’s open science specialist and faculty liaison for the Faculty of Geosciences. “But also, we want to have a good discussion about if we should still use rankings, about the data behind the rankings, if there are better alternatives,” he said.

Bosman’s thread on social media explaining the university’s decision to withdraw from the rankings has been shared over 2,000 times since it was posted on 1 October. Comments have been largely supportive. “You see a lot of reactions from around the world from people who are really having problems with the extent to which rankings almost dictate what institutions should do and how they should behave,” Bosman said.

Utrecht University’s withdrawal from the THE world rankings follows talks with a national expert group in the Netherlands on the use of rankings, and the university’s decision to sign up to More Than Our Rank, a movement aimed at rethinking how rankings are used.

While the THE ranking relies on universities providing the required data, other global rankings draw up their lists without necessarily relying on input from the universities. Part of Utrecht University’s rationale for pulling out of THE’s list is that the effort needed to supply THE with the data is not justified because the methodology is not in line with the university’s core values.

A critique of global rankings

Universities use rankings to attract students or find collaborators; governments cite them to attract talent to their country or region; and researchers consult them when weighing career options.

But there are questions over the validity of the data used to compile rankings and whether the information supplied by universities is entirely accurate. The ranking organisations also rarely make public the algorithms used to draw up their lists, raising transparency issues.

There is also potential for institutions to game the system by changing minor elements that carry a lot of weight in the ranking metrics. For example, hiring one well-cited professor could boost a university’s ranking without improving quality overall. In France, universities have been criticised for merging faculties and research institutes to boost their rankings.

Another problem is a perceived anglophone bias, with the top 20 places in most rankings dominated by US and UK universities.

“Of course, a ranking is a competitive list,” said THE’s chief global affairs officer, Phil Baty. “But universities have thrived for centuries on competition, academics thrive on competition, competitive research funding, competitive grant funding, it’s all part and parcel of the higher education sector, which is healthy.”

Baty said THE also works as a collaborative tool, bringing universities together at annual summits and providing data that helps institutions find relevant partners. He added that the western dominance of the rankings is beginning to change.

“We've seen Chinese universities rocketing up the rankings over the past two decades. We now have Chinese universities at number 12 and 14. The National University of Singapore is at 19. We're seeing India now as the fourth best represented country in the world,” Baty said. “Yes, the rankings still favour a western elite […]. But we're seeing change and we're genuinely monitoring real world change in global higher education.”

For Baty, there is no perfect model, but he does not see the sector completely moving away from metrics-based rankings. Instead, more emphasis will be placed on using data to get a broader picture of a university’s quality – something Baty says THE is doing by breaking down the numbers on its website.

Baty is keen for Utrecht University to return to the ranking. “We want to provide a true picture of the world of higher education at the moment,” he said. “Utrecht University not participating is ensuring we're not representing the Netherlands as clearly as we could. We'd love to have a dialogue with them about whether they come back in.”

Research assessment reforms

The debate on university rankings in Europe is linked to moves to reform how research and researchers are assessed. In July last year, an agreement was drafted with the goal of placing less emphasis on metrics such as the number of papers published and journal impact factors. The reform is spearheaded by the European University Association (EUA), by the funding agencies represented by Science Europe, and by the European Commission.

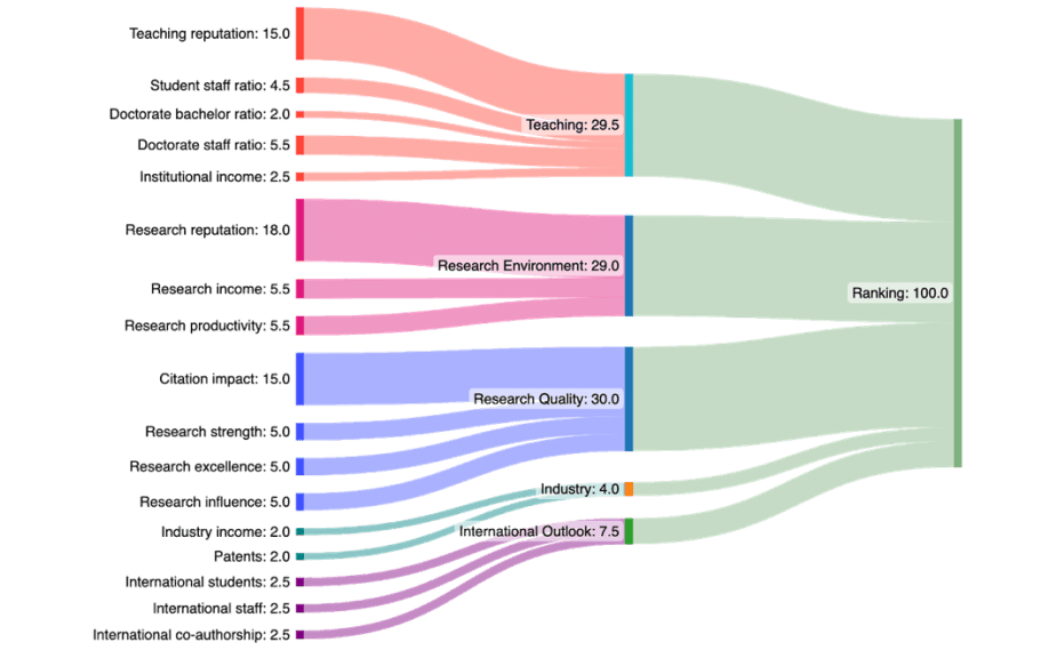

Quantitative metrics are commonly used by global ranking organisations. For example, research quality, which measures citation impact, research strength, excellence and influence, makes up 30% of a university’s score in the THE World University Rankings.

To date, 637 organisations have signed the reform agreement and the Coalition for Advancing Research Assessment (CoARA) has formed around it. While this builds on the Declaration on Research Assessment (DORA) agreed in 2012, the CoARA agreement puts more emphasis on action.

“There has been a lot of criticism of DORA – for universities just signing it but doing nothing after that moment of signing,” Bosman said. “That is quite likely different with CoARA because it's built into the signing that you commit to actions and to being part of workshops and to sharing your practices of implementing the ideas of CoARA.”

Monika Steinel, deputy secretary general of the EUA, said: “I would be happy if movements such as CoARA led to a reflection on the methodology [of rankings] and to questions about the kind of data that's collected. But I wouldn't want to speculate on whether that is likely to happen. I would be happy if it did.”

Some universities might also be inspired by Utrecht University’s decision. “I think that would be positive, if only because it shows us that there are different ways of thinking about rankings and different ways of using rankings in Europe,” said Steinel. “But whether this is the beginning of a trend, I wouldn't be able to say.”

Lidia Borrell-Damián, secretary general of Science Europe, says things are starting to change, and CoARA is an example of that. “I think that the advantage of this momentum is that research is just one of the aspects in society that is evolving,” Borrell-Damián said. “There is a lot more governance involving citizens, there is a lot more dialogue in society, there is a plea for equity in education, there is a plea for equity and social opportunities […]. We are not working in isolation in a world that is not having these discussions elsewhere.”

Editor’s note: This article was updated 17 October 2023 to specify that THE does not use journal impact factors.

A unique international forum for public research organisations and companies to connect their external engagement with strategic interests around their R&D system.